As I write this article, I’m looking over at the hundreds of vinyl records on my shelf. I realize I’m an analog soul living in a very digital world. But over 15 years as a DJ, I’ve developed a kind of magic power: knowing what song to play, when, and for whom. Applied to personal audio, or to voice-controlled intelligent personal assistants such as Google Home, this idea can help connect music to audiences at the right time.

Human Algorithms

DJs, as the great British ethnomusicologist John Blacking might have put it, are talented listeners. Through experience and necessity, we’ve developed the ability to tag songs based on their emotional job. Does the mood need to change? Let’s try happy: Stevie Wonder, “Sir Duke.” A recent breakup? The Dramatics, “In the Rain.” A great last song to put people at ease so they don’t get into drunken fights on their way out of a bar at the end of the night? Bob Marley, “Jamming.” Or Serge Gainsbourg, “Lola Rastaquouère.”

And while this functional view of music may serve as theory for media studies, it’s important to remember that the jobs content performs differ across cultures. Some cultures produce sounds that, while musical to Western ears, are not considered music by the people who make them; the Muslim call to prayer, known as the Adhan, is one example. That distinction is itself valuable information. After all, we learn not just by observing how sounds are created but also by understanding our relationship to them, even as outsiders.

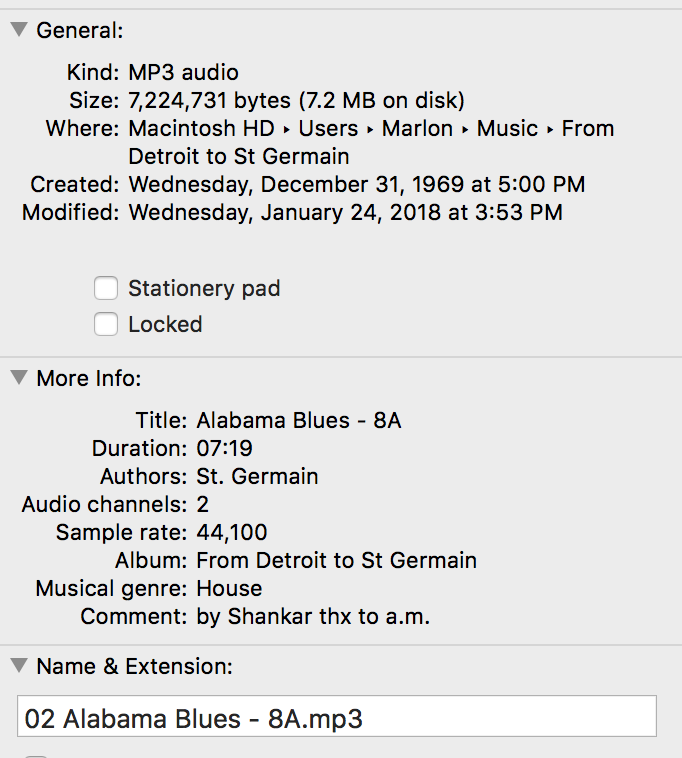

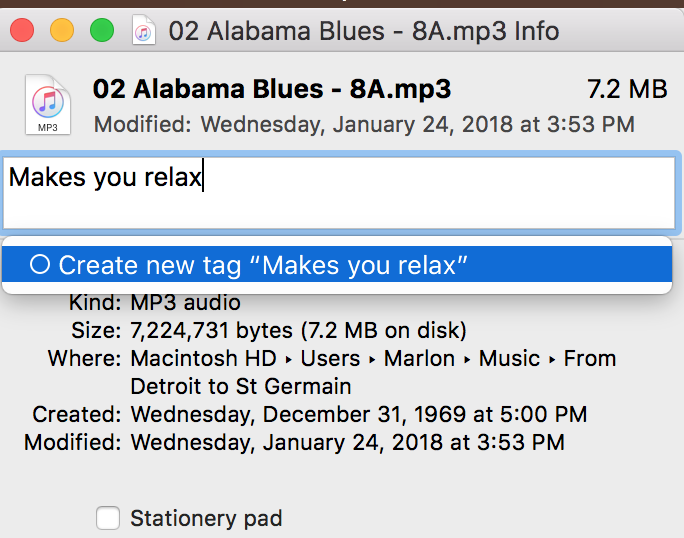

Metadata Can Go Further

This is a typical MP3 (ID3) tag used for organizing your music in iTunes. It contains a song’s basic information. Similarly, most people describe online videos, immersive media, and other types of content with basic metadata such as word count, duration, and hardware specifications.
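To make the contrast concrete, here is a minimal Python sketch, assuming a hypothetical `Track` record that keeps two basic tag fields (title and artist, as an MP3 tag would) and adds a `jobs` field for the kind of emotional-job tags a DJ assigns by ear. The job labels are illustrative, drawn from the examples earlier in this article; they are not part of any real tagging standard.

```python
from dataclasses import dataclass, field


@dataclass
class Track:
    # Basic fields, similar to what an ID3 tag stores.
    title: str
    artist: str
    # Hypothetical "emotional job" tags; not part of any real tag format.
    jobs: set[str] = field(default_factory=set)


def tracks_for_job(library: list[Track], job: str) -> list[Track]:
    """Return every track tagged with the requested job, in library order."""
    return [t for t in library if job in t.jobs]


library = [
    Track("Sir Duke", "Stevie Wonder", {"lift the mood"}),
    Track("In the Rain", "The Dramatics", {"after a breakup"}),
    Track("Jamming", "Bob Marley", {"last song of the night"}),
    Track("Lola Rastaquouère", "Serge Gainsbourg", {"last song of the night"}),
]

print([t.title for t in tracks_for_job(library, "last song of the night")])
# ['Jamming', 'Lola Rastaquouère']
```

A real catalog would need a shared vocabulary of jobs, and, as noted earlier, that vocabulary would differ across cultures.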

DJ Thinking

By mapping the relationship of a song to the needs of an audience, we can begin to teach the Alexas and Siris of the digital world how to connect us to music when we need it, within the context of our emotional state, the state of our environment, or the state we hope to bring about. One of the big challenges today is that many people may not know what to ask for, or they ask in a way (“Hey Alexa, play me a James Brown song”) that gives AI little to work with in terms of decision making. Which James Brown song? This is where our own personal data, shared with consent, can begin to personalize our music experience. Sounds good, huh? Our own personal DJ who knows when to change the mood.
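One way to picture the “Which James Brown song?” problem is as a ranking step: the request narrows the catalog to one artist, and context decides among the candidates. The sketch below assumes hypothetical mood tags on each track and a listener context inferred, with consent, from personal data. The titles are placeholders, not real catalog entries, and counting shared tags is simply the most basic scoring rule that illustrates the idea.

```python
from dataclasses import dataclass


@dataclass
class Candidate:
    title: str
    # Hypothetical mood tags; a real system would need curated or learned labels.
    moods: frozenset[str]


def pick_track(candidates: list[Candidate], listener_moods: set[str]) -> Candidate:
    """Pick the candidate sharing the most mood tags with the listener's context.

    Ties go to the earlier candidate, because max() returns the first maximal item.
    """
    return max(candidates, key=lambda c: len(c.moods & listener_moods))


# Placeholder stand-ins for one artist's catalog, not real track data.
catalog = [
    Candidate("Song A", frozenset({"energetic", "party"})),
    Candidate("Song B", frozenset({"mellow", "late night"})),
    Candidate("Song C", frozenset({"energetic", "workout"})),
]

# Hypothetical context, e.g. inferred from time of day and recent listening.
context = {"mellow", "late night"}
print(pick_track(catalog, context).title)  # Song B
```

A real assistant would likely weigh many more signals, but the shape is the same: an underspecified request plus context yields one confident choice.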

The idea of using emotional metadata is also central to how BuzzFeed approaches creating viral content. As a producer at BuzzFeed, you constantly hear the question, “What is the job of this ____?”

Spotify is another company digging deep into our relationship with music, exploring what makes a song danceable, how acoustic it is, and even how happy it sounds, a measure it calls valence. But these attributes offer only clues toward the intuition a seasoned DJ has.
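Spotify’s Web API reports danceability, acousticness, and valence as floats between 0.0 and 1.0. The sketch below shows one hypothetical way to translate a DJ-style job into ranges over those three features. The `JOB_RULES` thresholds and the two placeholder tracks’ values are invented for illustration, not taken from Spotify data.

```python
# Hypothetical rules: each job maps feature names to inclusive (low, high) ranges
# on the 0.0 to 1.0 scale that Spotify uses for these audio features.
JOB_RULES: dict[str, dict[str, tuple[float, float]]] = {
    "lift the mood": {"valence": (0.7, 1.0), "danceability": (0.6, 1.0)},
    "wind down": {
        "valence": (0.3, 0.8),
        "acousticness": (0.5, 1.0),
        "danceability": (0.0, 0.5),
    },
}


def fits_job(features: dict[str, float], job: str) -> bool:
    """True if every feature the job's rule names is present and within range."""
    rule = JOB_RULES[job]
    return all(
        name in features and lo <= features[name] <= hi
        for name, (lo, hi) in rule.items()
    )


# Invented feature values for two placeholder tracks.
tracks = {
    "Track X": {"valence": 0.85, "danceability": 0.75, "acousticness": 0.10},
    "Track Y": {"valence": 0.40, "danceability": 0.30, "acousticness": 0.80},
}

print([name for name, feats in tracks.items() if fits_job(feats, "wind down")])
# ['Track Y']
```

Hand-tuned ranges like these can shortlist tracks, but they cannot tell you that the room needs a change; that judgment is still the DJ’s.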

How do we continue formalizing new approaches to metadata?

The work being done by linguists such as Ruth Jeannine Brillman at Spotify in Boston is one example of how we are bringing together the best minds from computer science and linguistics to reinvent the way we interact with music streaming services.

“Software companies and linguists look at language in very different ways,” she says. “You need people with a computer science background to design and program these platforms, but you also need people who understand how to treat the more nuanced components of language so you can really understand what the user is asking for.”

Through this work, I believe we will learn a great deal not only about our relationship to sound but also about how our cultures and languages provide the foundation for musical experience. Beyond that, we have an opportunity to delve deeper into these relationships, considering the functions of music and the ways media can improve our health.

If we believe in a song’s power to bring us together, if we believe that VR or film is the ultimate empathy machine, we have no choice but to evolve how we catalog the world’s media, organizing it around the functions it serves in society.

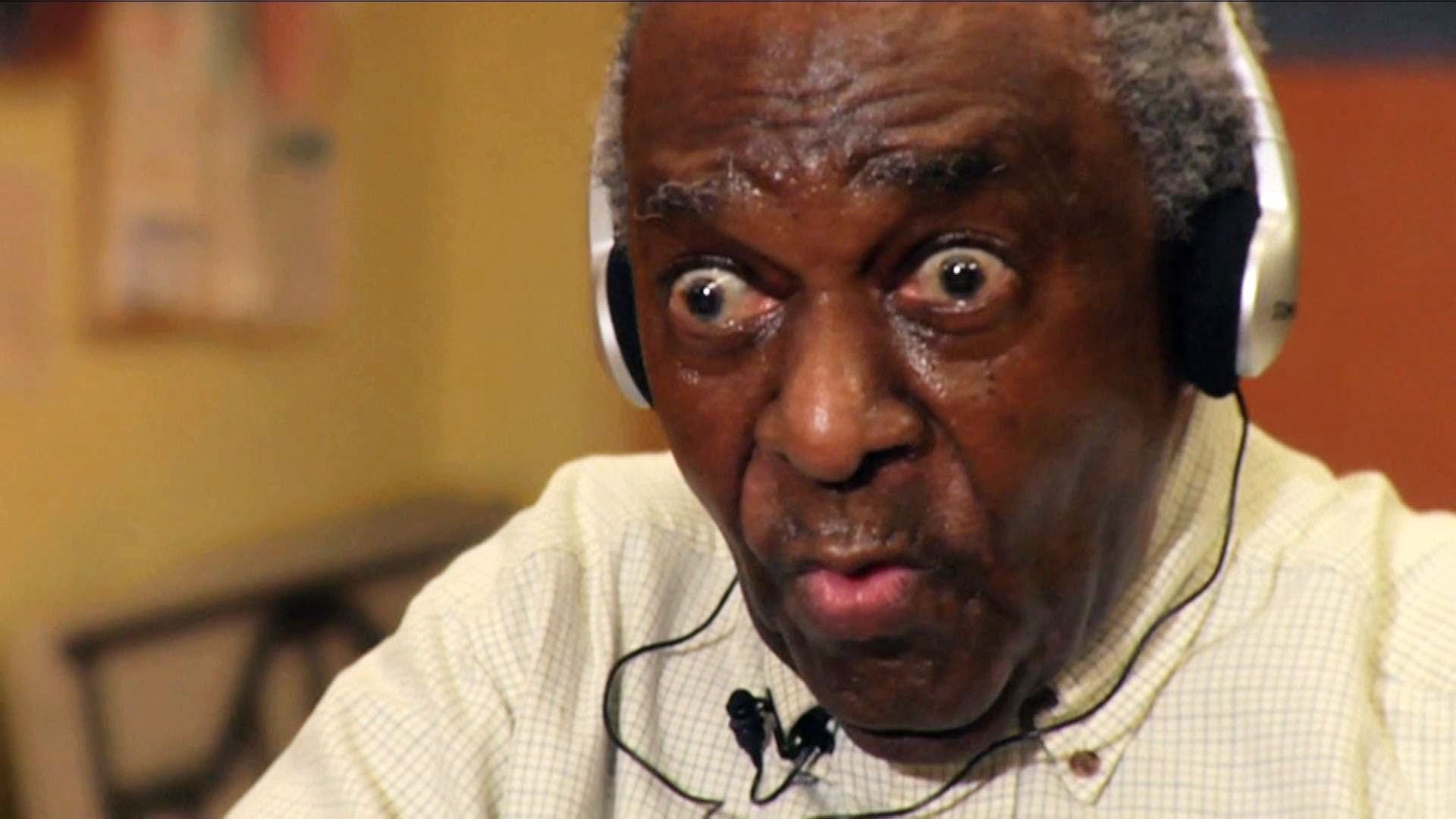

The benefits range from operational efficiencies, like those we’re seeing at BuzzFeed, to supporting the work being done to document and explore the benefits of music for patients with Alzheimer’s. Overall, this effort will require not only engineering, business, and data skills but also cultural competency. If you’re up for the challenge, drop me a line.

Continue the conversation on LinkedIn!